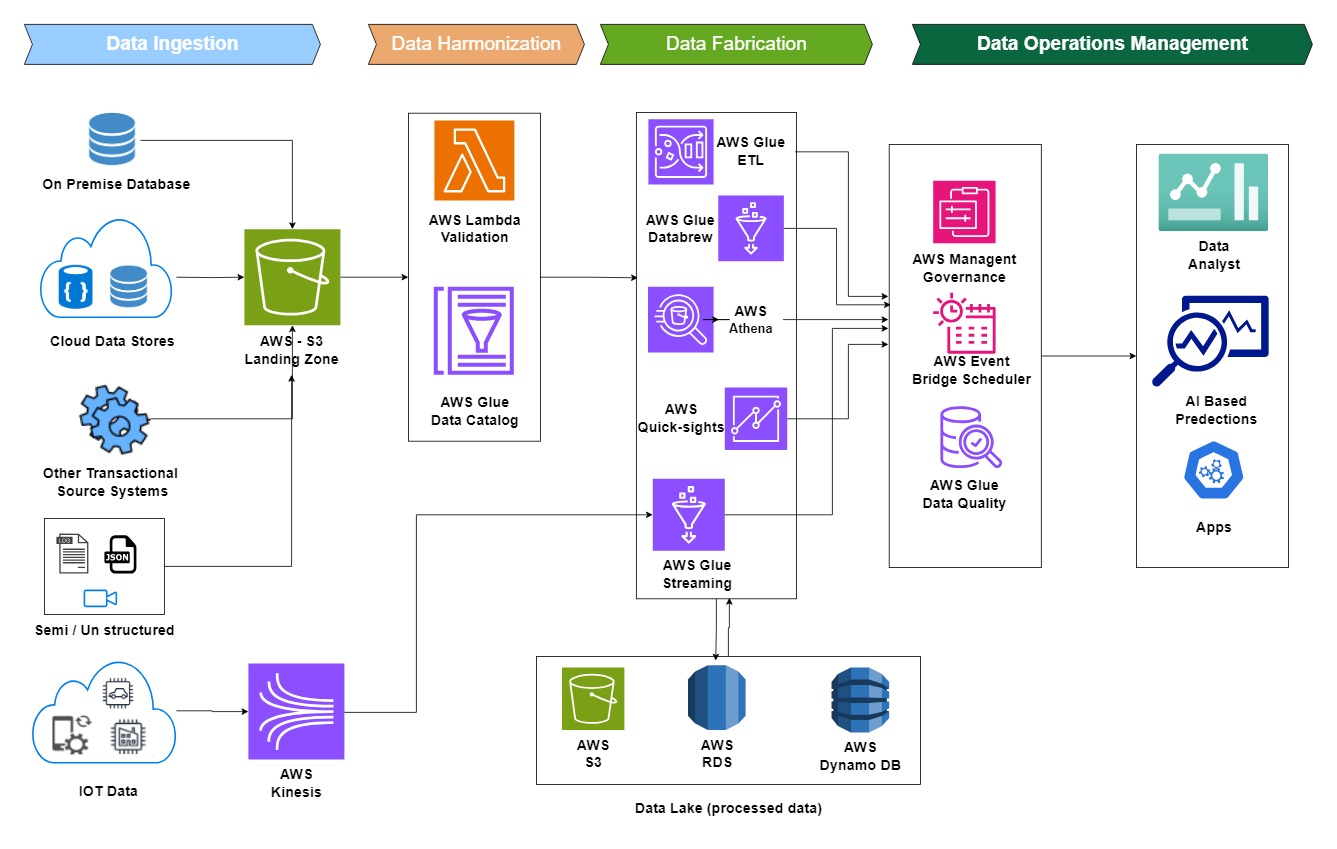

Objective

To efficiently extract, transform, and load data from various sources, ensuring high data quality and governance.

Focus

Managing data from On-Premise Databases, Cloud Data Stores, IoT devices, and other sources to provide valuable insights and predictions.

Stage 1 – Data Ingestion

- On-Premise Databases: Traditional databases within the organization, such as SQL Server or Oracle.

- Cloud Data Stores: Storage solutions hosted on cloud platforms, including data warehouses and cloud-native databases.

- Other Transactional Source Systems: Systems that generate transactional data, such as CRM or ERP systems.

- Semi/Unstructured Data: Data without a fixed tabular schema, such as logs, social media content, and documents, which requires different handling methods.

- IoT Data: Real-time data from sensors, smart devices, and industrial equipment.

Flow Detail

- IoT Data is directly fed into AWS Kinesis for real-time data streaming and analysis.

- All other data types first land in a Landing Zone on AWS S3, which serves as initial storage and a staging area.
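The routing rule in Flow Detail can be sketched as a small function that decides where a record goes. This is a minimal sketch under stated assumptions: the stream name, the bucket name, the `source_type` value `"iot"`, and the `raw/source=<name>/ingest_date=<YYYY-MM-DD>/` key layout are all hypothetical, and the code only computes the destination without making any AWS calls.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical resource names; the architecture does not specify them.
IOT_STREAM = "iot-telemetry-stream"
LANDING_BUCKET = "example-landing-zone"


@dataclass(frozen=True)
class Destination:
    service: str  # "kinesis" for streaming, "s3" for the Landing Zone
    target: str   # stream name or s3:// URI


def landing_key(source: str, filename: str, received_at: datetime) -> str:
    """Build a date-partitioned object key for the Landing Zone (assumed layout)."""
    day = received_at.astimezone(timezone.utc).strftime("%Y-%m-%d")
    return f"raw/source={source}/ingest_date={day}/{filename}"


def route(source_type: str, source: str, filename: str, received_at: datetime) -> Destination:
    """IoT data goes straight to Kinesis; every other source type is staged in S3 first."""
    if source_type == "iot":
        return Destination("kinesis", IOT_STREAM)
    key = landing_key(source, filename, received_at)
    return Destination("s3", f"s3://{LANDING_BUCKET}/{key}")
```

Partitioning the Landing Zone by source and ingestion date is a common choice because Glue crawlers and Athena can treat `key=value` path segments as partitions; whether this pipeline uses such a layout is not stated.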

Stage 2 – Data Harmonization

- AWS Lambda: Serverless compute service used to trigger data validation processes and ensure that data meets predefined standards.

- AWS Glue Data Catalog: A central repository for storing metadata, making it easier to manage, discover, and conform datasets.

- Data Validation: Ensuring data consistency and accuracy before moving it to the next stage. Lambda functions automate the validation process.
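A minimal sketch of a validation Lambda, assuming it is triggered by S3 event notifications when CSV files arrive in the Landing Zone. The event fields used (`Records`, `s3.bucket.name`, `s3.object.key`) follow the standard S3 notification format; `REQUIRED_COLUMNS`, `validate_rows`, and the injectable `load_rows` parameter are hypothetical, and the default loader needs `boto3` and AWS credentials.

```python
import csv
import io
from urllib.parse import unquote_plus

# Hypothetical columns every landed CSV file must contain.
REQUIRED_COLUMNS = {"order_id", "customer_id", "amount"}


def read_s3_csv(bucket: str, key: str) -> list[dict]:
    """Default loader: download a CSV object from S3 (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the validation logic can be tested without AWS

    body = boto3.client("s3").get_object(Bucket=bucket, Key=key)["Body"].read()
    return list(csv.DictReader(io.StringIO(body.decode("utf-8"))))


def validate_rows(rows: list[dict]) -> list[str]:
    """Return a list of problems; an empty list means the file passed."""
    if not rows:
        return ["file is empty"]
    problems = []
    missing = REQUIRED_COLUMNS - set(rows[0])
    if missing:
        problems.append(f"missing columns: {sorted(missing)}")
    for i, row in enumerate(rows, start=1):
        if not (row.get("order_id") or "").strip():
            problems.append(f"row {i}: empty order_id")
    return problems


def lambda_handler(event, context, load_rows=read_s3_csv):
    """Validate every object referenced in an S3 event notification."""
    results = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])  # keys arrive URL-encoded
        problems = validate_rows(load_rows(bucket, key))
        results.append({"key": key, "valid": not problems, "problems": problems})
    return results
```

Injecting `load_rows` keeps the rule logic testable locally with a fake loader; when Lambda invokes `lambda_handler(event, context)`, the default S3 reader is used.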

Purpose

- Standardizes data formats.

- Maintains schema consistency.

- Validates data against business rules to ensure data quality.
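The three Purpose bullets map onto three small steps: standardize formats, check the schema, and apply business rules. The sketch below is illustrative only. The column set, the accepted date formats (including the day-first `%d/%m/%Y`), and the currency whitelist are hypothetical, and in practice the expected schema would more likely come from the Glue Data Catalog than be hard-coded.

```python
from datetime import datetime
from decimal import Decimal, InvalidOperation

# Hypothetical target schema, e.g. mirrored from a Glue Data Catalog table definition.
EXPECTED_COLUMNS = {"order_id", "order_date", "amount", "currency"}
# Hypothetical input date formats (day-first assumed); all normalized to ISO 8601.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")
# Hypothetical business rule: only these currencies are accepted.
ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}


def parse_date(text: str):
    """Return the date as YYYY-MM-DD, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    return None


def parse_amount(text: str):
    """Return a finite Decimal, or None if the text is not a usable number."""
    try:
        value = Decimal(text)
    except InvalidOperation:
        return None
    return value if value.is_finite() else None


def standardize(row: dict) -> dict:
    """Standardize formats: tidy column names, then convert the known fields."""
    clean = {str(k).strip().lower(): str(v).strip() for k, v in row.items()}
    if "order_date" in clean:
        clean["order_date"] = parse_date(clean["order_date"])
    if "amount" in clean:
        clean["amount"] = parse_amount(clean["amount"])
    if "currency" in clean:
        clean["currency"] = clean["currency"].upper()
    return clean


def validate(row: dict) -> list[str]:
    """Check schema consistency and business rules on a standardized row."""
    problems = []
    columns = set(row)
    if columns != EXPECTED_COLUMNS:  # schema consistency
        problems.append(
            f"schema mismatch: missing={sorted(EXPECTED_COLUMNS - columns)}, "
            f"unexpected={sorted(columns - EXPECTED_COLUMNS)}"
        )
    if "order_date" in row and row["order_date"] is None:
        problems.append("order_date has an unrecognized format")
    # Business rules
    if "amount" in row and (row["amount"] is None or row["amount"] <= 0):
        problems.append("amount must be a positive number")
    if "currency" in row and row["currency"] not in ALLOWED_CURRENCIES:
        problems.append("currency is not supported")
    return problems
```

Standardizing before validating means the rules only have to handle one representation per field, which keeps them short.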

Stage 3 – Data Fabrication

- AWS Glue ETL: Extracts, transforms, and loads data from various sources into formats suitable for analysis. It automates complex tasks like data merging, filtering, and enrichment.

- AWS Glue DataBrew: A visual tool that helps clean and normalize data without coding, making it well suited to data analysts who want to work with the data directly.

- AWS Athena: An interactive query service that allows SQL-based analysis of data stored in AWS S3 without the need for data movement.

- AWS QuickSight: A BI tool that provides interactive dashboards and visualizations built directly on the cleaned data.

- AWS Glue Streaming: Processes real-time IoT data as it arrives through AWS Kinesis, making it suitable for continuous data flows.

- All cleaned and extracted data is stored in the Data Lake on AWS S3, with structured data also stored in AWS RDS (Relational Database Service) and AWS DynamoDB (NoSQL Database Service).
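Glue ETL and Glue Streaming jobs typically run Apache Spark code; the plain-Python sketch below only illustrates the logic of two of the steps above and does not use the Glue or Spark APIs. `merge_filter_enrich` shows merging (a left join of orders to customers), filtering (dropping cancelled orders), and enrichment (derived columns); `tumbling_window_avg` shows the kind of fixed-window aggregation a streaming job might apply to IoT readings from Kinesis. All field names are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def merge_filter_enrich(orders: list[dict], customers: list[dict]) -> list[dict]:
    """Merge orders with customers, drop cancelled orders, add derived fields."""
    region_by_customer = {c["customer_id"]: c.get("region") for c in customers}
    result = []
    for order in orders:
        if order["status"] == "cancelled":  # filtering
            continue
        result.append({
            **order,
            # merging: a left join, so orders without a matching customer are kept
            "customer_region": region_by_customer.get(order["customer_id"]) or "UNKNOWN",
            # enrichment: a derived column (assumes ISO 8601 order_date strings)
            "order_year": int(order["order_date"][:4]),
        })
    return result


def tumbling_window_avg(readings: list[dict], window_seconds: int = 60) -> dict:
    """Average each device's values over fixed, non-overlapping time windows."""
    buckets = defaultdict(list)
    for r in readings:
        window_start = r["ts"] - r["ts"] % window_seconds  # align epoch seconds to the window
        buckets[(r["device_id"], window_start)].append(r["value"])
    return {key: mean(values) for key, values in buckets.items()}
```

In a real Glue job the same join and aggregation would usually be written with Spark DataFrame operations so they scale beyond a single machine's memory.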

Stage 4 – Data Operations Management

Data Management

- AWS Management & Governance: Tools such as AWS Config and AWS CloudTrail help ensure compliance and security and provide an audit trail of data handling processes.

- AWS EventBridge Scheduler: Manages the scheduling and automation of ETL tasks, ensuring timely execution.

- AWS Glue Data Quality: Ensures that the data being delivered meets predefined quality standards, checking for accuracy, consistency, and completeness.

Data Delivery (Output)

- Dashboards for Data Analysts: Interactive dashboards via AWS QuickSight for data visualization and reporting.

- AI-Based Predictions: Machine learning models use processed data to provide predictive analytics and insights.

- Applications: Data is delivered to business applications for operational decision-making and real-time updates.
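The Glue Data Quality item under Data Management can be illustrated with a plain-Python sketch of three common checks: completeness, uniqueness, and value range. These mirror the intent of Glue Data Quality rule types such as `Completeness`, `IsUnique`, and `ColumnValues`, but the code does not call the Glue API. The thresholds and the hypothetical `gate` function, which releases data to dashboards, ML models, and applications only when every check passes, are assumptions.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    rule: str
    passed: bool
    observed: float  # the measured ratio the rule was judged on


def completeness(rows: list[dict], column: str, threshold: float) -> CheckResult:
    """Share of rows with a non-empty value must exceed the threshold."""
    filled = sum(1 for r in rows if r.get(column) not in (None, ""))
    ratio = filled / len(rows) if rows else 0.0
    return CheckResult(f"completeness({column}) > {threshold}", ratio > threshold, ratio)


def uniqueness(rows: list[dict], column: str) -> CheckResult:
    """Every non-empty value in the column must occur only once."""
    values = [r[column] for r in rows if r.get(column) not in (None, "")]
    ratio = len(set(values)) / len(values) if values else 1.0
    return CheckResult(f"unique({column})", ratio == 1.0, ratio)


def value_range(rows: list[dict], column: str, low: float, high: float) -> CheckResult:
    """All non-empty numeric values must fall inside [low, high]."""
    values = [float(r[column]) for r in rows if r.get(column) not in (None, "")]
    inside = sum(low <= v <= high for v in values)
    ratio = inside / len(values) if values else 1.0
    return CheckResult(f"{column} between {low} and {high}", ratio == 1.0, ratio)


def gate(results: list[CheckResult]) -> bool:
    """Hypothetical release gate: deliver data only if every check passed."""
    return all(r.passed for r in results)
```

Returning the observed ratio alongside the pass/fail flag makes it easy to log quality trends over time, not just failures.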

Objective

- To ensure secure, compliant, and high-quality data handling.

- To enable informed decision-making through accurate reporting and predictive analytics.